Introduction

My goal in this post is to simplify machine learning as much as possible. I have summarized its core ideas in a question/answer format so that interested readers can get the big picture quickly.

What is Machine Learning?

Machine learning is the study of computer algorithms that enable computers to learn. The learning is based on examples, direct experience or instruction. In general, machine learning is about learning to do better in the future based on what was experienced in the past.

What’s its relation to Artificial Intelligence?

Machine learning is a core subarea of Artificial Intelligence (AI) because:

- It is very unlikely that we will be able to build any kind of intelligent system capable of any of the facilities that we associate with intelligence, such as language or vision, without using learning to get there. These tasks are otherwise simply too difficult to solve.

- We would not consider a system to be truly intelligent if it were incapable of learning since learning is at the core of intelligence.

Although a subarea of AI, machine learning also intersects broadly with other fields, especially statistics, but also mathematics, physics, theoretical computer science and more.

What are some examples of machine learning applications?

Optical Character Recognition (OCR) – Face Detection – Spam Filtering – Topic Spotting – Spoken Language Understanding – Medical Diagnosis – Customer Segmentation – Fraud Detection – Weather Prediction

What are the general approaches to machine learning?

Supervised Learning

Supervised learning makes the computer learn from examples whose correct answers are given. For instance, we might tell the computer that a specific handwritten “Z” is really the letter “Z”. Afterward, when the computer is asked whether a new handwritten letter is a “Z” or not, it can answer.

A supervised learning problem can be either a classification or a regression problem. In classification, we want to categorize objects into a fixed set of categories. In regression, on the other hand, we are trying to predict a real value; for instance, we may wish to predict how much it will rain tomorrow.
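To make the classification/regression distinction concrete, here is a minimal Python sketch on made-up data. It uses two standard techniques that the post itself does not name: a 1-nearest-neighbor classifier (predict the label of the most similar training example) and an ordinary least-squares line for regression. The letter feature values and the humidity/rainfall numbers are purely hypothetical.

```python
def nearest_neighbor_classify(train, x):
    """Classification: return the label of the training example closest to x."""
    _features, label = min(
        train, key=lambda ex: sum((a - b) ** 2 for a, b in zip(ex[0], x))
    )
    return label


def fit_line(xs, ys):
    """Regression: fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Classification: two hypothetical numeric features per handwritten letter.
letters = [((0.9, 0.1), "Z"), ((0.8, 0.2), "Z"), ((0.2, 0.9), "N"), ((0.1, 0.8), "N")]
print(nearest_neighbor_classify(letters, (0.85, 0.15)))  # Z

# Regression: hypothetical humidity (%) vs. rainfall (mm) on past days.
humidity = [40, 55, 70, 85]
rainfall = [0.0, 2.0, 4.0, 6.0]
slope, intercept = fit_line(humidity, rainfall)
print(slope * 90 + intercept)  # about 6.67 mm predicted for 90% humidity
```

The classifier's answer is always one of the fixed categories, while the regression output is a real number.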

Unsupervised Learning

Unsupervised learning makes the computer divide a set of objects into groups on its own, based on the similarities and differences between them, without being told what the groups are. For example, if the objects are a mix of cucumbers and tomatoes, the computer can use differences in color, size and smell to place some of them in one group and the rest in another, even though it was never told that the first group is called “cucumbers” and the second “tomatoes”.

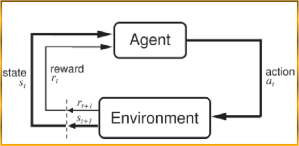
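A minimal sketch of the fruit example using k-means, one common clustering algorithm (the post does not prescribe a specific one). The two features, a redness score between 0 and 1 and a length in centimeters, and their values are hypothetical; smell is left out. No labels are given anywhere: the algorithm only sees the feature vectors.

```python
import random


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=100, seed=0):
    """Group points into k clusters without using any labels (plain k-means)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k distinct data points
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assign every point to its nearest center.
        assignment = [
            min(range(k), key=lambda c: squared_distance(p, centers[c])) for p in points
        ]
        # Move each center to the mean of the points assigned to it.
        new_centers = []
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                new_centers.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centers.append(centers[c])  # keep an empty cluster's old center
        if new_centers == centers:
            break  # converged: assignments will no longer change
        centers = new_centers
    return assignment, centers


# Hypothetical (redness, length_cm) values: three cucumber-like, three tomato-like.
fruits = [(0.1, 20.0), (0.15, 18.0), (0.2, 22.0), (0.9, 7.0), (0.85, 6.0), (0.95, 8.0)]
groups, _ = kmeans(fruits, k=2)
print(groups)  # the first three share one cluster id, the last three the other
```

The cluster ids 0 and 1 are arbitrary, which mirrors the text: the computer finds the groups but does not know they are called cucumbers and tomatoes. Features are usually rescaled before clustering; here the groups differ enough in length that skipping that step does not change the result.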

Reinforcement Learning

Sometimes a single action (such as identifying the type of a fruit) is not what matters; what matters is the policy, that is, the sequence of actions that reaches the goal. There is no such thing as the best action in isolation; an action is good if it is part of a good policy. Game playing is a good example: a single move by itself is not that important; it is the sequence of right moves that wins.
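A minimal sketch of the policy idea using Q-learning, a classic reinforcement learning algorithm that the post does not itself describe. The environment is hypothetical: a corridor of five cells in which the agent can step left or right and receives a reward only when it reaches the last cell, so no individual move is rewarded on its own. The learning rate, discount factor, exploration rate and episode count are illustrative choices.

```python
import random

N_STATES = 5          # hypothetical corridor cells 0..4
GOAL = N_STATES - 1   # cell 4 is the goal
ACTIONS = (-1, +1)    # index 0: step left, index 1: step right


def step(state, action):
    """Move along the corridor; the only reward is 1 for reaching the goal."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit, sometimes explore; ties broken randomly.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Value flows back from the goal through the best next-state value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(GOAL)]
print(policy)  # greedy action in each non-goal cell: right everywhere
```

Because the reward arrives only at the end, earlier moves acquire value only through the discounted value of the states they lead to, which is the sense in which an action is good when it is part of a good policy.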

What is a simple example of a machine learning problem?

Figure 1 demonstrates supervised learning. Notice that it consists of two phases (which can also take place at the same time):

- Training phase: the computer learns what the right things to do are. As you can see, it learns from examples that bats, leopards, zebras and mice are land mammals (positive, + sign), whereas ants, dolphins, sea lions, sharks and chickens are not (negative, - sign).

- Testing phase: what the computer has learned is evaluated on new examples. It is asked to state whether a tiger, a tuna and a platypus are land mammals or not.
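The two phases of the land-mammal example can be sketched in Python. The attribute values below (has_fur, lives_in_water) are simplified, hypothetical choices. The post does not specify a learner, so Find-S, a textbook algorithm that returns the most specific conjunctive rule covering all positive examples, is swapped in for illustration.

```python
# Hypothetical, simplified attributes for each animal.
ATTRIBUTES = ("has_fur", "lives_in_water")

training = [
    ("bat", (True, False), "+"),
    ("leopard", (True, False), "+"),
    ("zebra", (True, False), "+"),
    ("mouse", (True, False), "+"),
    ("ant", (False, False), "-"),
    ("dolphin", (False, True), "-"),
    ("sea lion", (True, True), "-"),
    ("shark", (False, True), "-"),
    ("chicken", (False, False), "-"),
]


def find_s(examples):
    """Learn the most specific conjunction that covers every positive example."""
    rule = None
    for _name, values, label in examples:
        if label != "+":
            continue  # Find-S ignores negative examples
        if rule is None:
            rule = list(values)
        else:
            # Any attribute on which positives disagree becomes "don't care" (None).
            rule = [r if r == v else None for r, v in zip(rule, values)]
    return rule


def predict(rule, values):
    """Label an example "+" if it satisfies every remaining constraint of the rule."""
    if rule is None:
        return "-"  # no positives seen: the most specific rule accepts nothing
    return "+" if all(r is None or r == v for r, v in zip(rule, values)) else "-"


# Training phase: learn a rule (concept) from labeled examples.
rule = find_s(training)
print(dict(zip(ATTRIBUTES, rule)))  # {'has_fur': True, 'lives_in_water': False}

# Testing phase: the learner is given only unlabeled examples.
testing = [("tiger", (True, False)), ("tuna", (False, True)), ("platypus", (True, True))]
for name, values in testing:
    print(name, predict(rule, values))  # tiger +, tuna -, platypus -
```

The platypus, an egg-laying, semi-aquatic mammal, shows how strongly the answer depends on which attributes are chosen: with these two attributes, the learned rule rejects it.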

Basic Definitions for a supervised learning classification problem

- An example (sometimes also called an instance) is the object that is being classified. For instance, in OCR, the images are the examples.

- An example is described by a set of attributes, also known as features or variables. For instance, in medical diagnosis, a patient might be described by attributes such as gender, age, weight, blood pressure, body temperature, etc.

- The label is the category that we are trying to predict. For instance, in OCR, the labels are the possible letters or digits being represented. During training, the learning algorithm is supplied with labeled examples, while during testing, only unlabeled examples are provided.

- The rule used for mapping from an example to a label is called a concept.

Three conditions for learning to succeed

There are three conditions that must be met for learning to succeed:

- We need enough data.

- We need to find a rule (concept) that makes a low number of mistakes on the training data.

- We need that rule to be as simple as possible.

Note that the last two requirements are typically in conflict with one another: we sometimes can only find a rule that makes a low number of mistakes by choosing a rule that is more complex, and conversely, choosing a simple rule can sometimes come at the cost of allowing more mistakes on the training data. Finding the right balance is perhaps the most central problem of machine learning. The notion that simple rules should be preferred is often referred to as “Occam’s razor.”
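The conflict between few training mistakes and simplicity can be seen in a small Python experiment on hypothetical noisy data. A single-threshold rule plays the role of a simple concept; a rule that memorizes the training set by copying the label of the nearest training point plays the role of a complex one. Both rules, the noise level and the sample sizes are illustrative assumptions, not taken from the sources.

```python
import random


def make_data(n, rng, noise=0.15):
    """Hypothetical data: label is 1 when x > 0.5, but each label flips with prob. noise."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data


def mistakes(rule, data):
    return sum(rule(x) != y for x, y in data)


def learn_threshold(train):
    """Simple rule: predict 1 when x > t, with t chosen to minimize training mistakes."""
    candidates = [0.0] + sorted(x for x, _ in train)
    best_t = min(candidates, key=lambda t: sum(int(x > t) != y for x, y in train))
    return lambda x: int(x > best_t)


def learn_memorizer(train):
    """Complex rule: copy the label of the nearest training point (memorizes everything)."""
    return lambda x: min(train, key=lambda ex: abs(ex[0] - x))[1]


rng = random.Random(0)
train, test = make_data(30, rng), make_data(1000, rng)
simple = learn_threshold(train)
memorizer = learn_memorizer(train)
for name, rule in [("threshold", simple), ("memorizer", memorizer)]:
    print(f"{name}: {mistakes(rule, train)} training mistakes, "
          f"{mistakes(rule, test)}/1000 mistakes on new data")
```

The memorizer always makes zero training mistakes, yet with noisy labels it typically makes more mistakes on new data than the threshold rule, which is the intuition behind Occam's razor. The exact counts depend on the random seed.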

References

Rob Schapire, COS 511: Theoretical Machine Learning, Lecture 1.

Ethem Alpaydin, Introduction to Machine Learning, 2004.