Introduction to the introduction

Greetings! Today I’ll talk about the AI topic I’m most concerned with nowadays: RL, CBR, hybrid CBR/RL techniques, and the comparison of CBR and RL techniques. As far as I know, the hybridization of CBR with RL is a relatively modern research topic (since 2005). However, I have never seen any document comparing RL to CBR (I’d love to see one).

I will give a brief introduction today.

Case-based reasoning

Case-based reasoning (CBR) is a machine learning technique that aims to solve a new problem (case) using a database (case-base) of old problems (cases). So, it depends on past experience.
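To make the idea concrete, here is a minimal sketch of the "retrieve an old case and reuse its solution" step. The gardening case-base, the feature layout (temperature, humidity) and the choice of Euclidean distance as the similarity measure are all my own assumptions for illustration; a real CBR system would also adapt and revise the retrieved solution.

```python
from dataclasses import dataclass
from math import dist


@dataclass
class Case:
    problem: tuple   # feature vector describing a past problem
    solution: str    # what was done about it


def retrieve(case_base, query):
    """Return the stored case whose problem is closest to the query."""
    return min(case_base, key=lambda c: dist(c.problem, query))


# Hypothetical case-base: (temperature, humidity) -> advice.
case_base = [
    Case((30.0, 0.2), "water the plants"),
    Case((15.0, 0.8), "do nothing"),
    Case((5.0, 0.5), "move plants indoors"),
]

new_problem = (28.0, 0.3)
best = retrieve(case_base, new_problem)
print(best.solution)  # the old solution is reused for the new problem
```

Here the new problem is closest to the hot, dry case, so that case's solution is the one reused.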

Reinforcement learning

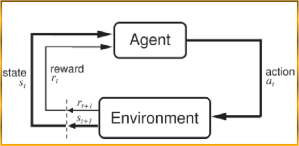

Reinforcement learning (RL) is a subfield of machine learning. It’s sometimes described as a hybrid of supervised and unsupervised learning, since the learner gets feedback (rewards) but no labeled correct answers. It simulates human learning based on trial and error.

- NB: If you are unfamiliar with the two techniques above, please read more about them before proceeding.

Can we compare CBR to RL?

What I think is: yes, we can. Some people say, “RL is a problem but CBR is a solution to a problem, so how can you compare them?” My answer: “When I say RL, I implicitly mean RL techniques such as TD-learning and Q-learning.” CBR solves the learning problem by depending on past experience, while RL solves the learning problem by trial and error.
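For readers who want to see what "trial and error" looks like in one of those techniques, here is a minimal sketch of tabular Q-learning. The corridor environment, the constants `ALPHA`, `GAMMA`, `EPSILON` and the function names are assumptions of mine, chosen only to keep the example small.

```python
import random

N_STATES = 5          # states 0..4; state 4 is the goal
ACTIONS = (-1, 1)     # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1


def step(state, action):
    """Hypothetical toy environment: a corridor with a reward at the right end."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: mostly exploit, sometimes explore.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                # Greedy choice; ties are broken at random.
                action = max(ACTIONS, key=lambda a: (q[(state, a)], rng.random()))
            next_state, reward, done = step(state, action)
            # Q-learning update: move the estimate toward the TD target.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])
            state = next_state
    return q


q = train()
greedy_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

Nothing tells the agent that stepping right is correct; it only sees the reward at the end of the corridor, and the state-action values gradually make stepping right the greedy choice in every state.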

How are CBR and RL similar anyway?

- In CBR we have the case-base; in reinforcement learning we have the state-action space. Each case consists of a problem and its solution. In RL, the action is likewise considered the solution to the current state.

- In CBR there can be a value for each case that measures the performance of that case; in RL each state-action pair has its value.

- In RL, rewards from the environment are the means of updating the state-action pairs’ values. In CBR there are no rewards, but after a case is applied and tested, a revision process updates its performance value.

- Retrieving a case in CBR corresponds to what RL calls “the policy of choosing actions from the action space”.

- In CBR, adaptation is performed after retrieving the solution (the action); in RL no adaptation is performed, because the action space contains ALL the possible solutions.

There are more similarities to mention, but these are enough.
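The analogy in the bullets above can be sketched in a few lines of Python. Everything here is an illustrative assumption on my part: the strategy-game cases, the `outcome` score handed to `revise`, and the running average used as the performance value.

```python
from dataclasses import dataclass
from math import dist


@dataclass
class Case:
    problem: tuple      # plays the role of the RL state
    solution: str       # plays the role of the RL action
    value: float = 0.0  # performance estimate, like a state-action value
    uses: int = 0


def retrieve(case_base, query, k=2):
    """CBR 'policy': among the k most similar cases, pick the best-valued one."""
    nearest = sorted(case_base, key=lambda c: dist(c.problem, query))[:k]
    return max(nearest, key=lambda c: c.value)


def revise(case, outcome):
    """After testing the case, fold the observed outcome into its value."""
    case.uses += 1
    case.value += (outcome - case.value) / case.uses  # running average


# Hypothetical case-base for a strategy game.
case_base = [
    Case((1.0, 1.0), "attack"),
    Case((1.2, 0.9), "retreat"),
    Case((5.0, 5.0), "build"),
]

query = (1.05, 1.0)
first = retrieve(case_base, query)   # both neighbours are untested; the closest wins
revise(first, outcome=-1.0)          # the attack went badly
second = retrieve(case_base, query)  # now the other neighbour looks better
print(first.solution, "->", second.solution)
```

The `revise` step is where the RL-style value update would slot in: a running average here, a reward-driven update in RL.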

So are CBR and RL techniques the same thing?

Of course not; there are many differences between them. CBR solves the learning problem by relying on past experience, while RL solves it by trial and error.

What is the significance of hybrid CBR/RL techniques?

It’s as if we make the computer learn by BOTH trial and error and past experience. Of course, this often leads to better results. We can say that the two complement each other.

How is CBR hybridized with RL and vice versa?

CBR needs RL techniques in the revising phase. Q-learning is used in the paper “Transfer Learning in Real-Time Strategy Games Using Hybrid CBR/RL” (2007); another example is the master’s thesis “A CBR/RL system for learning micromanagement in real-time strategy games” (2009).
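As a rough sketch of what a Q-learning-style revision could look like (this is my own simplification, not the method of either work cited above), the value of the applied case is nudged toward the reward plus the discounted value of the best case available in the next situation. The names `case_values`, `next_case_ids` and the constants are hypothetical.

```python
ALPHA, GAMMA = 0.5, 0.9  # assumed learning rate and discount factor


def revise_with_q_learning(case_values, case_id, reward, next_case_ids):
    """Update one case's value in place, Q-learning style, and return it.

    case_values maps a case id to its current value; next_case_ids lists
    the cases retrievable in the situation that followed (may be empty).
    """
    best_next = max((case_values[c] for c in next_case_ids), default=0.0)
    td_target = reward + GAMMA * best_next
    case_values[case_id] += ALPHA * (td_target - case_values[case_id])
    return case_values[case_id]


# Hypothetical values for two cases.
values = {"rush": 0.0, "defend": 1.0}
new_value = revise_with_q_learning(values, "rush", reward=0.5, next_case_ids=["defend"])
print(new_value)
```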

RL needs CBR for function approximation; an example is the paper “CBR for State Value Function Approximation in Reinforcement Learning” (2005).

RL needs CBR for learning continuous action models (also approximation); an example is “Learning Continuous Action Models in a Real-Time Strategy Environment” (2008).

Heuristically accelerated RL: one of the heuristics here is the case-base; an example is “Improving Reinforcement Learning by using Case Based Heuristics” (2009).
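To give a feel for the idea, here is a generic nearest-neighbour sketch (not the algorithm from the paper): each case stores a state and a value estimate, and the value of an unseen state is approximated from its closest cases. The distance weighting and the sample cases are assumptions of mine.

```python
from math import dist


def approximate_value(cases, state, k=3):
    """Estimate V(state) as a distance-weighted average over the k nearest cases.

    cases is a list of (state_vector, value) pairs.
    """
    nearest = sorted(cases, key=lambda c: dist(c[0], state))[:k]
    # Closer cases get larger weights; the small constant avoids division by zero.
    weights = [1.0 / (dist(s, state) + 1e-9) for s, _ in nearest]
    return sum(w * v for w, (_, v) in zip(weights, nearest)) / sum(weights)


# Hypothetical stored cases: one-dimensional states with known values.
cases = [((0.0,), 0.0), ((1.0,), 1.0), ((2.0,), 4.0), ((3.0,), 9.0)]
estimate = approximate_value(cases, (1.5,), k=2)
print(estimate)
```

The query state 1.5 was never stored, but it sits halfway between two stored cases, so the estimate lands halfway between their values.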
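A minimal sketch of the general idea, under my own assumptions: the learned values still drive action selection, but the action suggested by a retrieved case gets a bonus. The bonus form, the weight `xi` and all names below are hypothetical and not taken from the paper.

```python
def choose_action(q_values, suggested_action, xi=1.0, bonus=0.5):
    """Pick the action maximizing Q(s, a) + xi * H(s, a).

    q_values maps each action to its learned value in the current state.
    H(s, a) is `bonus` for the action a retrieved case suggests, else 0.
    """
    def score(action):
        h = bonus if action == suggested_action else 0.0
        return q_values[action] + xi * h
    return max(q_values, key=score)


q_values = {"left": 0.2, "right": 0.3}
print(choose_action(q_values, suggested_action="left"))  # the case tips the balance
print(choose_action(q_values, suggested_action=None))    # plain greedy choice
```

With `xi=0` the heuristic is ignored and this reduces to ordinary greedy selection, so the case-base only steers exploration and does not replace what RL learns.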

Continuous Case Based Reasoning – 1993

Experiments with reinforcement learning in problems with continuous state and action-spaces – 1997

A new heuristic approach for dual control – 1997

So the questions here are …

- When to use Reinforcement Learning and when to use Case-Based Reasoning?

- Are there cases where applying one of them is ruled out from a technical point of view?

- If we are dealing with an infinite number of state-action pairs, what is the preferred solution? Could both techniques serve well separately?

- How can we make the best use of a hybrid CBR/RL approach to tackle the problem?

I’ll do my best to answer them in future post(s). However, I’d be delighted if an expert could answer now.